About 3 years ago I wrote this "blockbuster" blog post about what I saw as research "culture wars" within the database/data management/data systems (DB) community. If you have not read that post, please go read it first--I promise you won't be bored. :) I am now back to re-examine the state of affairs. As before, my motivation is to offer a contemplative analysis, especially for the benefit of students and new researchers, not to prescribe conclusive takes.

TL;DR: My post was read in a wide array of research communities. The reactions were overwhelmingly affirmative. In just 2-3 years, SIGMOD/VLDB made praiseworthy and clinically precise changes to their peer review processes to tackle many issues, including the ones I spoke about. To my own (pleasant) surprise, I now think the grand culture wars of DB Land have ended. Not only did SIGMOD/VLDB not disintegrate, I believe they breathed new life into themselves to remain go-to venues for data-intensive computing. I explain some of the changes that I believe were among the most impactful. Of course, there are always more avenues for continual improvement on deeper quality aspects of reviews. I also offer my take on how the fast-growing venue, MLSys, is shaping up. Finally, as I had suspected in my previous post, the set of canonical DB cultures has grown to add a new member: The Societalists.

A Brief History of DB Culture Wars and My Blog Post

Circa 2017, the DB research community faced an identity crisis on "unfair" treatment of "systemsy" work at SIGMOD/VLDB. Thanks to some strategic brinkmanship by some leading names in DB systems research, the issue came to the attention of the wider DB research community. There were fears of a new Balkanization in DB land. Into this vexed territory walked I, a new faculty member at UC San Diego, just 1.5 years in. Concerned by the prospect of a prolonged civil war, I wrote my blog post to offer my unvarnished take on what I saw as the heart of the issue: lack of appreciation in peer review for the inherent multiculturalism of the DB area.

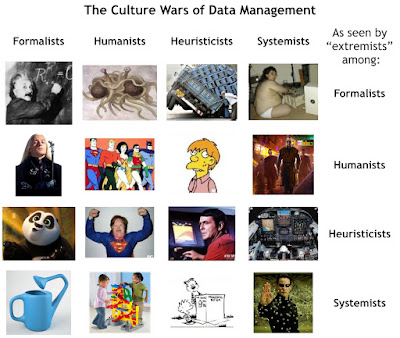

After much thought, I systematically decomposed "core" DB research into 4 canonical cultures. These are not based on the topics they study. Rather, they are set apart by their main progress metrics, their methodologies, technical vocabularies, nearest neighbors among non-DB CS areas, and overall style/content of their papers.

As I noted, hybridization among these cultures has always been common in the DB area, and it continues to be strong. For instance, Systemist+Formalist and Systemist+Humanist hybrids are popular. However, SIGMOD/VLDB had sleepwalked into a peer review situation where people were made to review papers across cultures they were not trained to evaluate fairly. The analogy I gave was this: asking a random SODA (or KDD) reviewer to evaluate a random OSDI (or CHI) paper. I ran my post by multiple DB folks, both junior and senior. Many shared most of the concerns.

Curiously, some junior folks did caution me that my post may land me in trouble with the "powers that be." I brushed that off and went public anyway. :) In hindsight, I think I did so in part because the issue was so pressing and because my message was (IMO) important for raising awareness.

I was blown away by the reactions I got after publishing and tweeting about my post. Many young researchers and students in the DB area affirmed my post's message. Folks from many nearby areas such as NLP, Data Mining, Semantic Web, and HCI emailed me to thank me for writing it. Apparently, their areas had faced the same issue. They recalled peer review mechanisms their areas' venues had adopted to mitigate this issue. Remarkably, I even got affirmative emails from folks in disciplines far away from CS, including biology, public health, and physics!

Coincidentally, I was invited to speak at the SIGMOD 2018 New Researcher Symposium. I made this topic a key part of my talk. There were raised eyebrows and gasps, of course, but the message resonated. In the panel discussion, I noted how SIGMOD/VLDB are no longer the sole center of the data universe and how they must compete well against other areas' venues, e.g., NSDI and NeurIPS. Interestingly, the head of SIGMOD was at the panel too and addressed the issues head-on. This matter was also discussed at the SIGMOD business lunch. Clearly, SIGMOD/VLDB were seized of this matter. That was encouraging to me.

Fascinatingly, never once did I hear from anyone that what I wrote hurt the "reputation" of SIGMOD/VLDB. I was still invited to SIGMOD/VLDB PCs every year. Indeed, one of my ex-advisors, Jignesh Patel, had offered public comment on my post that the DB community has always welcomed constructive criticism. Clearly he was proven right.

Key Changes to SIGMOD/VLDB Peer Review Processes

Over the last few years, I got multiple vantage points to observe the PC process changes introduced by SIGMOD/VLDB and assess their impact. The first vantage point is as a reviewer: I served on the PCs of both SIGMOD and VLDB back-to-back for all of the last 3-4 years. The second is as an author: I have been consistently submitting and publishing at both venues all these years. The third is as an Associate Editor (metareviewer) for VLDB'21. I did not play a role in any decision making on these changes though. I now summarize the key changes that I believe were among the most helpful. I do not have access to objective data; my take is based on the (hundreds of) examples I got to see.

- Toronto Paper Matching System (TPMS): In the past, papers were matched primarily by manual bidding based on titles/abstracts, an error-prone and unscalable process. TPMS directly tackled the most notorious issue I saw due to that flawed matching process: mismatch between the expertise/culture of a reviewer and the intellectual merits of a given paper. The pertinence and technical depth of reviews went up significantly.

- Naming the Research Cultures: In the past, reviewers would sometimes misunderstand and fight over papers that were hybrids or from cultures other than their own. SIGMOD gave authors the option to explicitly label their paper as Systemist. VLDB went further and delineated multiple "flavors" of papers, straddling "foundations and algorithms", "systems", and "information architectures". Reviewers are also asked to identify these so that during discussion they can ensure that a fish is not judged by its ability to climb a tree.

- More Policing and Power to Metareviewers: Any community needs policing to lay down the law. Peer review is no exception. In the past, I have seen uncivil terms like "marketing BS" and culture war terms like "just engineering" and "too much math" in reviews of my papers. SIGMOD/VLDB heads and PC Chairs of the last few years went the extra mile in creating more thorough and stricter guidelines for reviewers. Metareviewers/Associate Editors were empowered to demand edits to reviews if needed. The civility, fairness, and technical focus of reviews went up significantly.

Of course, deeper quality issues will likely never disappear fully. SIGMOD/VLDB can benefit from continual monitoring and refinement of these aspects. There is always room for improvement. Innovative ideas on this front must be welcomed. But having submitted papers to SOSP, MLSys, ICML, and KDD, it is clear to me that no venue has solved this problem fully. Different research communities must be open-minded to learn from each other's processes.

Amazingly, the above changes were achieved without major surgery to the PC structure. Both SIGMOD and VLDB retained a monolithic PC instead of a federated approach for the different cultures, which some other areas' venues follow. In case of contentious or low-confidence reviews, extra reviewers were added elastically. Full PC meetings, which some other areas' venues hold, turned out to be not really needed after such extra oversight was added. But as a disclaimer, I have not seen other areas' venues as an insider, except for serving on the PC of MLSys/SysML twice. I now think full PC meetings are likely overkill, especially for multicultural areas like DB. That said, I may not know of peculiar issues in other areas that make them employ federated PCs, full PC meetings, etc.

None of the above would have been possible without the phenomenal efforts of the PC chairs of SIGMOD/VLDB over the last few years and the executive boards. I got to interact often with quite a few PC chairs. I was amazed by how much work it was and how deeply they cared about fixing what needed to be fixed for the benefit of all stakeholders: authors, reviewers, and metareviewers.

Healthy Competition and Peacetime DB Boom

So, why did SIGMOD/VLDB evolve so rapidly? Well, apart from the banal deontological answer of "right thing to do," I contend there is also a fun utilitarian answer: competition! :) Both Darwinian evolution and Capitalism 101 teach us that healthy competition is helpful because it punishes complacency and smugness, spurs liveliness and innovation, and ultimately prevents stagnation and decay. This is literally why even the stately NSF dubs proposal calls "competitions." Research publication venues are not exempt from this powerful phenomenon.

I suspect SIGMOD/VLDB had an "uh oh" moment when SysML (now MLSys) was launched as a new venue for research at the intersection of computing systems and ML. In fact, this matter was debated at this epic panel discussion at the DEEM Workshop at SIGMOD'18. The DEEM sub-community is at the intersection of the interests of SIGMOD/VLDB and MLSys. Later I attended the first archival SysML in 2019 and wrote about my positive experience as an attendee afterward. Full disclosure: I was invited, as a PC member, to be a part of its founding whitepaper.

MLSys is undoubtedly an exciting new venue with an eclectic mix of a variety of CS areas: ML/AI, compilers, computer architecture, systems, networking, security, DB, HCI, and more, although the first 4-5 areas in this list seem more dominant there. But having served on its PC twice and submitted once, it is clear to me that it is a work in progress. It has the potential to be a top-tier venue, its stated goal. But I am uncertain how it can escape the local optimum that its closest precedent, SoCC, is stuck in. Most faculty in the systems, DB, and networking areas I know still do not view a SoCC paper as on par with an SOSP/SIGMOD/SIGCOMM paper. MLSys also seems "overrun" by the Amazons, Googles, and Facebooks of the world. While industry presence is certainly important, my sense is the "cachet" of a research publication venue is set largely by academics based on longer-term scientific merits, not industry peddling their product features. Unless, of course, some papers published there end up being highly influential scientifically. I guess time will tell. Anyway, healthy competition is welcome in this space. Even the systems area has new competition: JSys, modeled on PVLDB and JMLR.

Apropos competition from MLSys, SIGMOD/VLDB did not sit idly by. VLDB'21 launched its "counter-offensive" with the exciting new Scalable Data Science (SDS) category under the Research Track. Data Science, which has a broader scope than just ML, is widely regarded in the DB world and beyond as an important interdisciplinary arena for DB research to amplify its impact on the world. A key part of the SDS rationale is to attract impactful work on data management and systems for ML and Data Science. OK, full disclosure again: VLDB roped me in as an inaugural Associate Editor of SDS to help shape it for VLDB'21. And I am staying on in that role for VLDB'22 as well. SIGMOD too has something similar now. It is anyone's guess as to how all this will play out this decade. But I, for one, am certainly excited to see all these initiatives across communities!

That said, based on the recent SIGMOD/VLDB/CIDR conferences I attended, it is clear to me that "peacetime" among the DB cultures has also ushered in a new research boom. Apart from the "DB for ML/Data Science" line I mentioned above, the reverse direction of "ML for DB" is also booming. Both of these lines of work are inherently cross-cultural: Systemist+Heuristicist, Formalist+Heuristicist, Systemist+Heuristicist+Humanist, etc. Cloud-native data systems, data lake systems, emerging hardware for DBMSs, etc. are all booming areas. Much of that work will also be cross-cultural, involving theory, program synthesis, applied ML, etc. All will benefit from the multiculturalism of SIGMOD/VLDB.

A Fifth Canonical Culture: The Societalists

I concluded my "culture wars" post by noting that new DB cultures may emerge. It turns out a small fifth culture was hiding in plain sight but it is now growing: The Societalists. I now explain them in a manner similar to the other cultures.

The Societalists draw inspiration from the fields of humanities and social sciences (ethics, philosophy, economics, etc.) and law/policy but also logic, math, statistics, and theoretical CS. This culture puts the well-being of society at the center of the research world, going beyond individual humans. They differ from Humanists in that their work is largely moot if the world had only one human, while Humanist work is still very useful for that lone human. Popular topics where this culture is well-represented are data privacy and security, data microeconomics, and fairness/accountability/responsibility in data-driven decision making. Societalists study phenomena/algorithms/systems that are at odds with societal values/goals and try to reorient them to align instead. Many Societalists blend this culture with another culture, especially Formalists and Heuristicists. Common elements in Societalist papers are terms such as utility, accuracy tradeoffs, ethical dilemmas, "impossibility" results, bemoaning the state of society, and occasionally, virtue signalling. :) Societalist papers, not unlike Humanist papers, sometimes have multiple metrics, including accuracy, system runtime, (new) fairness metrics, and legal compliance. Many Societalists also publish at FAccT, AAAI, and ICML/NeurIPS.

This culture is now growing, primarily by attracting people from the other DB cultures, again akin to Humanists. I believe the fast-growing awareness of the importance of diversity, equity, and inclusion issues in CS and Data Science is contributing to this growth. Also important is the societal aspiration, at least in some democracies, of ensuring that data tech, especially ML/AI but also its intersection with DB, truly benefit all of society and do not cause (inadvertent) harm to individuals and/or groups, including those based on attributes with legal non-discrimination protection.

Since the grand culture wars of DB Land are now over (hopefully!), I do not see the need to expand my 4x4 matrix to 5x5--yay! But I will summarize the 5 canonical cultures with their most "self-aggrandizing" self-perception here for my records. :)

Concluding Remarks

I am quite impressed by how rapidly SIGMOD/VLDB evolved in response to the issues they faced recently, especially research culture wars. I believe they have now set themselves up nicely to house much of the ongoing boom in data tech research. They have shown that even "old" top-tier venues can be nimble and innovative in peer review processes. Well, VLDB already showed that a decade ago with its groundbreaking (for CS) one-shot revision and multi-deadline ideas. Some of that has influenced many other top-tier and other venues of multiple CS areas: UbiComp, CSCW, NSDI, EuroSys, CCS, Oakland, Usenix Security, NDSS, CHES, and more. Elsewhere the debate continues, e.g., see this blog post on ML/AI venues and this one on systems venues. Of course, eternal vigilance is a must to ensure major changes do not throw the baby out with the bathwater and also to monitor for new issues. But I, for one, think it is only fitting that venues that publish science use the scientific method to experiment with potentially highly impactful new ideas for their peer review processes and assess their impact carefully. Build on ideas that work well and discard those that do not; after all, isn't this how science itself progresses?

ACKs: I would like to thank many folks who gave me feedback on this post, including suggestions that strengthened it. An incomplete list includes Jignesh Patel, Joe Hellerstein, Sam Madden, Babak Salimi, Jeff Naughton, Yannis Papakonstantinou, Victor Vianu, Sebastian Schelter, Paris Koutris, Juan Sequeda, Julian McAuley, and Vijay Chidambaram. My ACKs do not necessarily mean they endorse any of the opinions expressed in this post, which are my own. Some of them have also contributed public comments below.

Reactions (Contributed Comments)

This post too elicited a wide range of reactions from data management researchers. Some have kindly contributed public comments to register their reactions here, both with and without attribution. I hope all this contributes to continuing the conversations on this important topic of peer review processes in computing research venues.

Jignesh Patel:

This looks good. One comment is that labels are what they are--sometimes too defining and unintentionally polarizing. Having said that, two key comments:

1. Increasingly we have researchers in our community who span across even these labels. This is a really good sign.

2. The view here is somewhat narrow of what the "world of research" looks like and does not include collaborations across fields like biology and humanities. Systemists, in your terminology here, are generally the ones that bridge outside the CS field for databases and that is quite crucial if you take a large societal perspective.

Keep on blogging.

Joe Hellerstein:

Enjoyable read!

One of the reasons I went into databases was that it's a crosscut of CS in its nature. It was driven from user needs, math foundations and system concerns from the first. And more recently into societal-scale concerns as you wisely point out. I think this makes our field more interesting and broad than many. And in some sense it underlies a lot of what you're writing about.

It has some downsides. We're sometimes "ahead of our time" and perceived as nichey, then have to suffer through mainstream CS rediscovering our basic knowledge later. And we can get diffuse as a community, as you've worried about in the past. But on balance I agree that times are pretty good and we're lucky to have had some community leaders innovate on process. PVLDB was a big win in particular.

Another thought. One viewpoint that's perhaps missing from your assessment is the longstanding connection between industry and academia in our field. This is much more widespread in CS now, but it's deep in our academic culture and continues to be a strength. Fields that have found applicability more recently could probably learn from some things we do in both directions.

Third thought: there's an underappreciated connection between databases and programming/sw-engineering/developer experience. Jim Gray understood this: e.g., the transaction concept as a general programming contract outside the scope of database storage and querying per se. State management is at the heart of all hard programming problems, and we have more to give there than I think we talk about. This is of increasing interest to me.

Sam Madden:

I do agree that review quality is up in the DB community--reviews are longer and more detailed--although I am still frustrated by the number of reviewers insisting on pseudoformalisms in systems papers (having just struggled with one of my students to add such nonsense in response to a reviewer comment).

I agree that going away from bidding to a matching system has been important, but from my point of view the single biggest innovation has been the widespread acceptance of a frequent and extensive revision process--reviewers are much more likely to give revisions. Having (mostly unsuccessfully) submitted a bunch of ML papers in the last two years, the SIGMOD/VLDB process is MUCH better, despite those ML papers using a similar review matching system. I attribute this largely to the revision process.

Babak Salimi:

I found it fascinating the way you broke down the research cultures in the SIGMOD/VLDB community into four tribes in your old post. Also, I appreciate the fact that you acknowledged the advent of an emerging line of work in databases that is society-centered.

Ever since I started publishing at and reviewing for SIGMOD/VLDB in 2018, the key changes in the reviewing process you referred to have been in place. So I can only imagine the mind-blowing scale of the culture war in the DB community prior to that time. While I can see those changes played a constructive role in deescalating the war, based on my anecdotal experiences, I think the war is far from over. As a matter of fact, each of these, now, five tribes has its own subcultures and communities. There is a huge difference between the expectations of a Societalist-Formalist reviewer and those of a Societalist-Heuristicist one. I still see reviewers butcher submissions based on these cultural differences. I see decent submissions with novel and interesting contributions treated unfairly because of lack of theoretical analysis (read it as w/o theorems); I see submissions that look into problems with little practical relevance cherished because they are full of nontrivial theorems; I see interesting foundational papers rejected because of lack of evidence of scalability/applicability; I see impactful system-oriented papers referred to as a bundle of engineering tricks or an amalgam of existing ideas.

The steps taken to mitigate the situation were crucial and effective, but I think the war is still ongoing, but maybe now within these subcultures. To alleviate the problems, we need to go beyond refining the submission distribution policy. We need to educate the reviewers with regard to these cultural differences and provide them with detailed rubrics and guidelines to make the reviewing process more objective. More importantly, we need to devise a systematic approach to evaluate the review qualities and hold reviewers accountable for writing irresponsible and narrow-minded reviews.

Juan Sequeda:

I'm thrilled to see the changes going on at SIGMOD/VLDB which serve as an example for other CS communities. I also agree on the Societalist as a new culture. I'm eager to see the outcomes of researchers mixing within these cultures. In particular, I'm interested in a Humanist + Societalist mix because that is going to push data management to uncharted territories.

Julian McAuley:

As an outsider I wasn't aware of the "culture wars" outside of your posts. Reviewers having different views on things or coming from different backgrounds sounds pretty typical of many communities and usually isn't a catastrophic event. Sometimes it leads to the formation of new conferences (e.g. ICLR) though to call that a "war" is a bit of a stretch. Maybe I just find the term "war" overused (e.g. "War on X") but I understand that's a term you've used previously and the intention is to cause a stir.

I mostly think twitter/blogs are quite separate from "real life". I am incredulous that anyone would warn you that blogging about something would risk your professional reputation (at least for what seems like a fairly innocuous opinion)! Twitter (for e.g.) is full of people with deliberately controversial/outrageous/inflammatory opinions, most of which are just separate from their professional life. Maybe that one Googler got fired for writing about innate gender differences or whatever but I can't really think of any academics who've suffered negative career consequences from blogs / tweets. Long story short I maybe wouldn't sound so surprised that the community didn't implode and that your career wasn't left in shambles!

Does part of this succumb to "the documentary impulse", i.e., the human desire to form narratives around events? The alternative is simply that the community shifted in interests, some new conferences formed, and reviewing practices changed a little (as they do frequently at big conferences). Again maybe I'm put off by the "war" term. Of course I'm being too dense: I realize that it's a blog and that establishing a narrative is the point. Take, e.g., NeurIPS: old conference, various splinter conferences have emerged, the review process changes every year in quite experimental ways. The makeup of the community has also changed drastically over the years. Would you say ML is undergoing or has undergone a similar culture war? If it has it's been pretty tame and never felt like an existential threat.

Likewise, what's the evidence that it's over? I'm sure in the next three years there'll be some new splinter conferences, and some more changes to the review procedure. Being in a state of constant change seems like the norm.

Anonymous:

I personally think that reviewing can still be improved a lot at most conferences, so I wouldn't try to imply that it's "fixed", but it's hard to make it much better without a lot of work for people in the community. One of the ways to improve it would be to have more reviewers per paper, but that's obviously more work for the reviewers, so they have to agree to do it. Another way is to have more senior people as reviewers.

My most informative reviews are usually from SOSP/OSDI/NSDI/SIGCOMM, who have 5-6 reviewers per paper for papers that make it past the "first round", but those conferences are also a lot of work for the people involved, and it's questionable whether this extra reviewing really improves the quality of the papers overall. Maybe a faster-and-looser approach is better for the field. In any case though, it's been great to see VLDB and SIGMOD move toward these explicit tracks and use TPMS.